Energy statistics nowcasting Challenge - The second round of the European Statistics Awards for Nowcasting

Lately, the European energy market has been unusually volatile. Policymakers and other users of European statistics often stress the need for improved timeliness. In the Energy Statistics Nowcasting Challenge, teams will compete using advanced modelling techniques and (nearly) real-time auxiliary data to come up with nowcasts for key energy time series well ahead of the official figures. The nowcasts that are closest to the official European statistics will be rewarded for accuracy, and the team that best documents its nowcasting models will win the reproducibility award.

The second round of European Statistics Awards for Nowcasting will begin in June with registrations open until 28 September 2023.

Timeline

The competition will begin on 1 June 2023 and will run for ten months until 31 March 2024. The deadline for registration is 28 September 2023.

Time series

Monthly energy statistics on:

- Inland gas consumption

- Oil and petroleum deliveries

- Electricity availability

Awards

Accuracy Award

First Prize EUR 3 000

Second Prize EUR 2 000

Third Prize EUR 1 000

Reproducibility Award

First Prize EUR 5 000

Teams

Teams comprising a maximum of five individuals with diverse backgrounds and expertise in time series analysis, forecasting, or nowcasting are eligible to participate in the competition. This contest presents an exceptional chance to apply your understanding of econometric time series modelling in an actual context and potentially receive up to EUR 8 000 per nowcasted time series. If your team secures the top spot for all three time series, you could earn up to EUR 24 000 in this round.

First round of the European Statistics Awards for Nowcasting

The winners of the nowcasting challenge have been announced

The first round was divided into 3 separate competitions, each focusing on the nowcasting of one economic indicator (PPI, PVI, Tourism), aimed at discovering promising methodologies and potential external data sources that could, now or in the future, be used to improve the timeliness of key EU economic indicators.

Frequently asked questions

I am not from Europe - can I still participate?

Yes, the European Statistics Awards for Nowcasting are open to participants from all continents. Please refer to the eligibility overview sections of the competitions:

- Energy Nowcasting Challenge - GAS

- Energy Nowcasting Challenge - OIL

- Energy Nowcasting Challenge - ELECTRICITY

Where are the data that I should use for nowcasting?

Unlike a traditional hackathon, teams do not get any particular data to use for the European Statistics Awards for Nowcasting. To edge out your competitors, you are free to use whichever auxiliary data you think are the most predictive ones – but finding them will be a challenge!

Can I submit nowcasts for European aggregates as well as for countries?

You can only submit nowcasts for countries. To maximise your chances of winning, we encourage you to submit for as many countries as possible.

Can our first point estimate submission be for e.g. August 2023 or October 2023 or are we obliged to submit point estimates for June 2023?

You are not obliged to make a submission for June 2023. Your first submission can be as late as October 2023. However, you are strongly encouraged to make your submissions as soon as possible as this will increase your chance at success and lower the probability that a submission will be invalid.

Can I change my model?

To get a higher accuracy score, you might modify the model that you use for an entry. Please note, however, that if you are in the running for the reproducibility awards, then the best integrity scores are awarded if you consistently apply the same model.

How do I make a submission?

In order to make your submission, create a zip file containing

- the point_estimates.json file, and

- the accuracy_approach_description.docx file.

Zip the files directly, not in a folder.

Next,

- navigate to “Participate > Submit / View Results”,

- select the corresponding "Reference Period", and

- click the “Upload a Submission” button.

This will open your file explorer, from where you can select the zip file you wish to upload.

The system returns an error if you upload the json file on its own instead of a zip file.
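The packaging step described above can be sketched in Python. This is a minimal illustration rather than an official tool: the function names `build_submission_zip` and `files_are_in_root` are hypothetical, and only the two file names come from the instructions above. The key point is that each file is stored under its bare name, so it lands in the root of the archive and not in a subfolder.

```python
import zipfile
from pathlib import Path


def build_submission_zip(files, zip_path):
    """Write the given files into zip_path at the archive root (no folders)."""
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for file in files:
            file = Path(file)
            # arcname=file.name drops any directory part of the path,
            # so the file is stored directly in the root of the archive.
            archive.write(file, arcname=file.name)
    return zip_path


def files_are_in_root(zip_path):
    """Return True if no archive member sits inside a subfolder."""
    with zipfile.ZipFile(zip_path) as archive:
        return all("/" not in name for name in archive.namelist())
```

A call such as `build_submission_zip(["point_estimates.json", "accuracy_approach_description.docx"], "submission.zip")` would then produce the archive, and `files_are_in_root("submission.zip")` offers a quick check before uploading.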

I am trying to upload my json files and receiving an error. Why?

The system returns an error if you upload the json file on its own instead of a zip file. In the zip file, the files have to be directly in the root, not in a subfolder.

In addition, the returned error shows how the zip file should be structured.

For further details, see how to submit.

Is it a must to submit the result in JSON?

Yes, the submission must be made in JSON format – but the JSON files must be embedded in a zip file.

For further details, see how to submit.

Does the Accuracy_Approach_description file need to be uploaded every time while submitting a result?

Yes – each monthly submission must be accompanied by an Accuracy_Approach_description file – but you do not need to change it between submissions if you are using the same approach. If you adjust your method between submissions, then the Accuracy_Approach_description file should be updated to reflect this.

Do you expect participants to deliver a description of the approach together with the submission of the first point estimate or is it possible to deliver the description of the approach with the submission of a later point estimate?

The description is expected with every submission, including the first one. In order to be eligible to compete for the Accuracy award, each monthly submission must be accompanied by an Accuracy_Approach_description file that outlines the approach. You do not need to change it between submissions if you are using the same approach. If you adjust your method between submissions, then the Accuracy_Approach_description file should be updated to reflect this.

I made multiple submissions for a particular reference period. Which submissions will enter the competition? The latest, the best, or all of them?

Only the last submission counts – it supersedes all previous submissions.

What does the performance ranking indicate?

Before the first release of European statistics for a given reference month, the performance ranking

- indicates, for each team, the number of entries made during that month

- is ordered by the time at which the last submission was made (and is thus completely unrelated to the forthcoming accuracy score of the team).

Once the European statistics have been released (and the entries have been evaluated) for the time series in question, the performance ranking

- is updated for each team to reflect the performance of the best entry (out of the up to five different entries) of the team for that month only

- is ordered by the performance of the best entry for that particular month

- is not cumulative, and hence only loosely related to the final accuracy score (even if a team ranks first for all months, this may stem from different entries, so another team altogether might win the first prize for accuracy)

When can I expect an answer to the question I submitted by e-mail?

Questions can be sent to the competition management team (at info@statistics-awards.eu) at any time during the competition. All questions will be answered in a timely manner. Those relevant for other competitors will be added to the FAQ. However, in order to avoid bias, questions that arrive 3 days prior to each submission deadline date will be answered after the deadline. Following each monthly deadline, the answering of questions will resume normally.

If I need urgent help with a submission issue, when can I expect an answer?

Specific submission issues can be reported to the competition management team (at info@statistics-awards.eu) any time during the competition. However, please note that the mailbox is only monitored on a best-efforts basis during working hours on regular working days (thus excluding any public holidays in Slovenia; see https://www.gov.si/en/topics/national-holidays/).

While every effort will be made to support teams with their submissions, late response by the competition management team cannot be invoked as a mitigating circumstance in case a team fails to achieve a submission within the time limits. Teams are thus encouraged to do whatever they can to avoid last-minute submission problems.

How can my team avoid last-minute submission problems?

Teams are also encouraged to familiarise themselves with the submission interface and submission requirements, and to (at least for the first reference period) make a mock submission to ensure that their submission is correctly structured and transmitted. This mock submission will be superseded by the “real” submission of the reference period.

Moreover, as support is not provided on a 24/7 basis, teams are encouraged to make their submissions early, well ahead of the deadline.

Glossary

DISCLAIMER: The aim of this glossary is to provide persons considering whether to compete for the European Statistics Awards for Nowcasting with an overview of some of its essential elements to allow them to assess whether it would be in their interest to form a team to submit entries. Please refer to the ‘learn the details’ tab of each individual competition description for authoritative information on the rules, terms and conditions for the competitions of the European Statistics Awards Programme.

Accuracy Awards

All entries are assigned an accuracy score. The team with the entry having the best accuracy score will win the 1st accuracy award (the 2nd and 3rd accuracy awards will be granted similarly).

Accuracy score

For each entry, the accuracy score is calculated as the sum of the five best country scores of that entry. In case there are fewer than five valid country scores for the entry, it is not eligible to compete for any awards.
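As a rough illustration of this rule, the sketch below sums the five best country scores of one entry. It assumes that "best" means lowest, which follows from the Country score entry (a small MSRE gives a better country score) but is an interpretation and not an official statement. The function name `accuracy_score` and the use of `None` for a missing or invalid country score are hypothetical choices made for the example.

```python
def accuracy_score(country_scores, required=5):
    """Sum the `required` best (assumed: lowest) valid country scores of one entry.

    country_scores maps a country code to its country score, or to None when
    no valid country score exists for that country.
    Returns None when the entry has fewer than `required` valid country scores,
    i.e. when it is not eligible to compete for any awards.
    """
    valid = sorted(score for score in country_scores.values() if score is not None)
    if len(valid) < required:
        return None
    return sum(valid[:required])
```

With six valid country scores, for example, only the five lowest would enter the sum; with four, the function returns `None`.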

Code

In order to compete for the Reproducibility Award, teams will be required to submit their programming code which was executed to predict the point estimates for a particular entry.

Completeness of documentation

This is an eligibility criterion for the Reproducibility Award. Incomplete entries will not be evaluated with respect to the Reproducibility Award criteria.

The evaluation panel will check the documentation of each entry and assess the completeness of its individual key elements, including:

- procedure description

- input data

- code (including inline comments)

and other documentation.

Country score

The country score of an entry is based on

- the volatility index for the country multiplied by

- the MSRE for that country across all monthly submissions of that entry.

An entry will receive a better country score if it has a small MSRE.

The volatility index (which has a lower value the more volatile the statistics in a particular country) allows teams to select more volatile countries (which will naturally result in higher MSRE values) and remain competitive for the Accuracy Award.

There must be a mean square relative error (MSRE) of less than 15% across all monthly submissions of an entry in order for a valid country score to be generated for that country for the entry in question. In some specific situations like the overall poor performance of models for a particular date, the evaluation panel may decide to increase this cut-off threshold.

Only countries for which there are at least 6 submissions are taken into account. In case there are more than 6 monthly submissions made for a country, the six submissions that generate the best country score will be used for the calculation.
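The rules in this entry can be combined into a short sketch. Several points are assumptions made for illustration and are not confirmed by the text: the relative error is taken with respect to the first official release, the 15% threshold is read as an MSRE value of 0.15, and the "best" six submissions are the six with the smallest squared relative errors (which minimises the score because the volatility index is constant for a given country). The function name `country_score` is hypothetical.

```python
def country_score(point_estimates, official_releases, volatility_index,
                  n_best=6, msre_cutoff=0.15):
    """Return volatility_index * MSRE over the n_best monthly submissions.

    Returns None when no valid country score can be generated, i.e. when there
    are fewer than n_best submissions or the MSRE is not below the cut-off.
    msre_cutoff=0.15 is an assumed reading of the 15% threshold.
    """
    if len(point_estimates) != len(official_releases):
        raise ValueError("each point estimate needs a matching official release")
    if len(point_estimates) < n_best:
        return None
    # Squared relative difference between each nowcast and its first release.
    squared_errors = sorted(
        ((y - r) / r) ** 2 for y, r in zip(point_estimates, official_releases)
    )
    # Keep the n_best submissions that give the lowest (best) score.
    best_msre = sum(squared_errors[:n_best]) / n_best
    if best_msre >= msre_cutoff:
        return None
    return volatility_index * best_msre
```

Under these assumptions, a country with seven submissions would have its weakest month dropped before the MSRE is averaged.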

Documentation

For entries competing exclusively for the Accuracy Awards, it suffices to submit a short description of the approach used to arrive at the submitted point estimates. For an entry to also be in the running for the Reproducibility Award, complete documentation of the entry is required at the end of the competition.

Entry

An entry consists of a submission of point estimates (i.e. nowcasted values) for at least 5 countries using a given approach.

Each team may submit up to 5 different entries, and thus submit 5 different nowcasts. Teams will have to take care to keep their entries separate, since each entry is evaluated in isolation.

Integrity of the approach

This is one of the five award criteria evaluated for the Reproducibility Award.

For an entry to be eligible for evaluation, at least 6 separate monthly submissions must be made for that entry for 6 different reference periods (months). Furthermore, to score high on the integrity criterion, the same approach should be consistently used for all submissions of an entry.

An adaptive approach based on the adjustment of (super)parameters taking contextual information into account is permissible. The compliance with the integrity criterion (and the extent to which model parameter changes can be linked to contextual information) will be assessed by the panel when evaluating entries for this criterion.

Teams that are interested in competing for the Reproducibility Award should therefore foresee different entries when implementing different approaches.

Interpretability of the approach

This is one of the five award criteria evaluated for the Reproducibility Award.

For each entry, the evaluation panel will assess the approach’s interpretability, i.e. the extent to which a human could understand and articulate the relationship between the approach’s predictors and its outcome.

Performance Ranking

The monthly performance ranking will be posted over the course of the competition and will indicate the performance of the different entries of the teams for each month separately. For each European statistics value, the monthly performance ranking will be updated once the official release is available for all countries (thus with a lag in relation to the reference period). The performance ranking is purely cross-sectional and does not take the cumulative performance over time into account. It thus does not indicate the final ranking, which will be calculated during the evaluation phase of the competition based on the six best nowcasts of each entry.

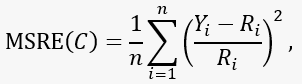

Mean Square Relative Error (MSRE)

Mean square relative error (MSRE): average of the square of the relative differences between the point estimate (i.e. the nowcasted value) (Yi) and the first official release (Ri). Better nowcasting approaches are those where the MSRE is closer to zero.

C is the country in the provided entry and n is the number of different estimation periods.

In case there are more than 6 monthly submissions made for a country, the six submissions that generate the best country score will be used for the calculation.
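The definition in this entry can be written out as a formula. The expression below is a reconstruction from the wording above, assuming that the relative difference is taken with respect to the first official release R_i; the Evaluation section of each competition remains the authoritative source.

```latex
\mathrm{MSRE}_C = \frac{1}{n} \sum_{i=1}^{n} \left( \frac{Y_i - R_i}{R_i} \right)^{2}
```

Here Y_i is the point estimate and R_i the first official release for estimation period i, C is the country and n is the number of estimation periods, as defined above.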

Nowcast

A nowcast is a point estimate of a European statistics value, provided before the end of the reference period that the value refers to.

Openness of the input data

This is one of the five award criteria evaluated for the Reproducibility Award.

The evaluation panel will assess the possible external data used for each entry with respect to their openness, availability, coverage (for instance geographical and time coverage) and consistency.

The general task of the evaluation panel when providing a score for this criterion is to assess whether there would be any major obstacles to scaling up an entry (typically made for a few countries and a certain time period) to all (or most) countries of the European Statistical System.

Originality of the approach

This is one of the five award criteria evaluated for the Reproducibility Award.

For each entry, the evaluation panel will compare the approach used to the state-of-the-art, i.e. those pre-existing approaches that are closest to the approach applied for the entry, and the extent to which the entry represents an improvement over these pre-existing approaches.

The evaluation panel will assign higher marks to entries that are bringing novelty with respect to existing approaches, and lower marks to entries that rely on "baseline state-of-the-art" approaches.

Panel of evaluators

For each European Statistics Awards competition, a panel of independent expert evaluators has been appointed. The evaluation panel is responsible for rating each entry with regard to its accuracy score and reproducibility score.

On the basis of the scores, the European Commission (Eurostat) decides on the awarding of the Reproducibility Awards and Accuracy Awards.

The evaluation panel is at liberty to set the following additional criteria during the competition:

- In the case that European statistics are not published for a particular country in due time, the evaluation panel is at liberty to set a cut-off date for the beginning of evaluation. If European statistics have not been published by the cut-off date, all entries containing a point estimate for that country will be treated as invalid for that month. Teams are therefore encouraged to submit point estimates for more than 5 countries as well as make more than 6 submissions, thereby reducing the risk that their submissions become invalid due to a lack of publication of a European statistics value.

- Based on all the entries received by the final deadline, the evaluation panel may choose to increase the 15% mean square relative error (MSRE) cut-off threshold required for a country score to be valid.

Reproducibility Awards

Eurostat attaches great importance to approaches that have the potential to be scaled up for use in European statistics production. Therefore, entries for which teams submit additional documentation will be in the running for the Reproducibility Award.

The entry with the highest reproducibility score will receive the Reproducibility Award.

However, for each European statistics value, only those entries are considered that:

- are in the best quartile with regard to their accuracy score (since there is expected to be little interest in reproducing underperforming models);

- have a reproducibility score above the minimum cut-off – both overall and for each individual reproducibility criterion. The minimum cut-off is provided in the Evaluation section of each competition.

If there are no such entries (best quartile) for a given European statistics value, then no reproducibility prize is awarded.

Reproducibility Score

The reproducibility score of an entry will be evaluated by a panel of expert evaluators, who will rate the entry according to its integrity, openness, originality, interpretability, and simplicity.

The key eligibility criterion for evaluation is that the team provides complete documentation, as stated in the Evaluation section of each competition.

Simplicity of the approach

This is one of the five award criteria evaluated for the Reproducibility Award.

For each entry, the evaluation panel will evaluate the approach used with respect to its predictive validity and assess the distinction between simple, comprehensible approaches and more complicated, non-linear approaches.

A higher score will be assigned to approaches which use parameters that are explainable and can be linked to assumptions made when developing the approach.

Submission of nowcasts

For each entry, at least six (6) monthly submissions of nowcasts over ten (10) months are required. Each submission consists of a single point estimate for each of at least 5 countries for the European statistics concerned, to be submitted via the competition platform ahead of the deadline set in the rules. If a team makes multiple submissions during the same month, only the latest submission made before the deadline is retained; prior submissions are superseded. If, for whatever reason (including technical issues), a team fails to make any submission within the set deadline for a reference period, no submission is taken into account for that team for the reference period. Waiting until the very last day to submit is therefore a risky strategy, as support might be unavailable to resolve any transmission or formatting issues. Teams are encouraged to make an early ‘mock’ submission at least one week before the deadline of each reference period so that any such issues can be resolved early on. This submission would be superseded by the ‘real’ one submitted later on.

Team

Participation takes place in the form of a team of one to five individuals. There are no restrictions on how participants organise themselves: private persons, persons working for private companies and persons working for public and/or research institutions are equally welcome to participate. Persons who work for organisations that are part of the European Statistical System (including Other National Authorities) can participate with certain limitations, which can be found in the Terms and Conditions section of each competition.

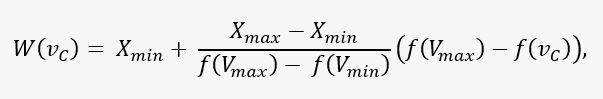

Volatility Index

A volatility index for a given country and a given European statistics value is a normalised measure of the volatility score. It is used to take into account the difficulty of nowcasting European statistics associated with the corresponding countries.

For each European statistics value, the volatility index for the countries is obtained by means of sorting the volatility scores, and normalising them by using a scaling function that maps the scores into a (semi-)linear order. The function used to scale is:

where vC is the country’s volatility score, Vmin and Vmax are the minimum and maximum country volatility scores, respectively, f is the scaling function, and Xmin and Xmax are the minimum and maximum values of the range onto which the country volatility scores are mapped. For all competitions, we use Xmin = 0.5 and Xmax = 2, and the natural logarithm is used as the scaling function f.

Note that the scaling function returns smaller weights for countries with higher volatility scores; this is to ensure (1) some level of normalisation of countries with high and low volatility rates, and (2) that teams take countries with higher volatility scores into consideration when preparing their entries.
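The sketch below shows one scaling function that is consistent with the description in this entry. It is an assumed form and not the official formula, which should be taken from the Evaluation section of each competition: the log-scaled position of vC between Vmin and Vmax is mapped linearly onto the range from Xmax down to Xmin, so that the most volatile country receives Xmin and the least volatile country receives Xmax. The function name `volatility_index` is hypothetical.

```python
import math


def volatility_index(v_c, v_min, v_max, x_min=0.5, x_max=2.0, f=math.log):
    """Map a volatility score onto [x_min, x_max]; higher volatility gives a lower index.

    Assumed form (not confirmed by the competition text):
        x_max - (f(v_c) - f(v_min)) / (f(v_max) - f(v_min)) * (x_max - x_min)
    """
    if not 0 < v_min < v_max:
        raise ValueError("need 0 < v_min < v_max")
    if not v_min <= v_c <= v_max:
        raise ValueError("v_c must lie between v_min and v_max")
    # Position of the country on the scaled axis: 0 for the least volatile
    # country, 1 for the most volatile one.
    position = (f(v_c) - f(v_min)) / (f(v_max) - f(v_min))
    return x_max - position * (x_max - x_min)
```

Because the country score multiplies this index by the MSRE, a lower index for volatile countries offsets the larger errors that such countries naturally produce.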

For each country and each European statistics value, the volatility index will be fixed for the entire duration of the competition round and provided in the Evaluation section of each competition.

Volatility Score

The volatility score for a given country and a given European statistics value is a measure of the variability of the statistics’ associated time series calculated using historical data.

For each European statistics value, a country’s volatility score vC is computed based on the historical variability of its time series. Official data from the Eurostat data portal were downloaded and used to calculate the volatility scores:

- Dataset: Supply, transformation and consumption of gas - monthly data [NRG_CB_GASM] [G3000] Natural gas [IC_CAL_MG] Inland consumption - calculated as defined in MOS GAS [TJ_GCV] Terajoule (gross calorific value - GCV).

- Dataset: Supply and transformation of oil and petroleum products - monthly data [NRG_CB_OILM] [O4671] Gas oil and diesel oil [GID_CAL] Gross inland deliveries – calculated [THS_T] Thousand tonnes.

- Dataset: Supply, transformation and consumption of electricity - monthly data [NRG_CB_EM] [E7000] Electricity [AIM] Available to internal market [GWH] Gigawatt-hour.

To compute a country’s volatility score for a European statistics value, we apply a GARCH(1,1) model to the available data from the period 2008-2022. The model can take the seasonality of the time series into account when calculating the score. If the volatility score cannot be calculated with a minimum level of assurance (for example, because the available time series is too short), the country series is considered out of scope for the competition.
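For readers unfamiliar with the model, the sketch below shows the standard GARCH(1,1) conditional variance recursion for given parameters. It only illustrates the model class named above; it does not fit any parameters, it does not handle seasonality, and it is not the procedure used to derive the official volatility scores, which this glossary does not spell out. The function name `garch11_conditional_variances` and the choice of starting value are assumptions made for the example.

```python
def garch11_conditional_variances(residuals, omega, alpha, beta):
    """Return the conditional variance path of a GARCH(1,1) model.

    Recursion: sigma2[t] = omega + alpha * residuals[t-1]**2 + beta * sigma2[t-1]
    The path starts at the unconditional variance omega / (1 - alpha - beta),
    which is a common but not the only possible initialisation.
    """
    if omega <= 0 or alpha < 0 or beta < 0 or alpha + beta >= 1:
        raise ValueError("need omega > 0, alpha >= 0, beta >= 0 and alpha + beta < 1")
    variances = [omega / (1.0 - alpha - beta)]
    for eps in residuals:
        # A large shock (eps) raises the next period's variance via alpha;
        # beta carries over part of the previous variance.
        variances.append(omega + alpha * eps ** 2 + beta * variances[-1])
    return variances
```

In practice the parameters omega, alpha and beta would be estimated from the historical series, typically with a dedicated econometrics library.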